Social Sciences

Source: http://blog.impactstory.org/four-great-reasons-to-stop-caring-so-much-about-the-h-index/

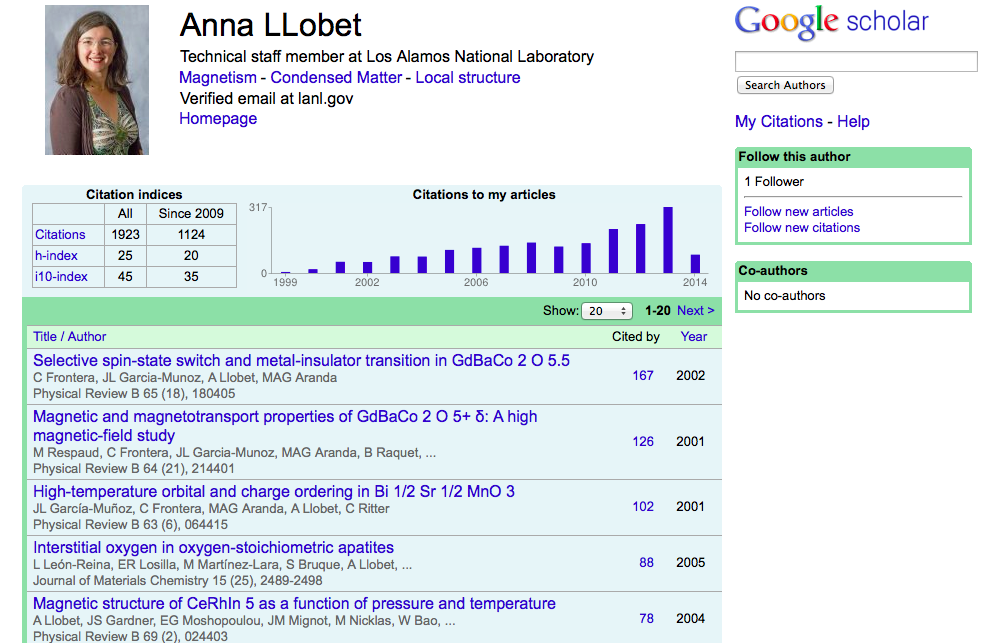

Four great reasons to stop caring so much about the h-index

You're surfing the research literature on your lunch break and find an unfamiliar author listed on a great new publication. How do you size them up in a snap?

Google Scholar is an obvious first step. You type their name in, find their profile, and, ah, there it is! Their h-index, right at the top. Now you know their quality as a scholar.

Or do you?

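The h-index is an attempt to sum up a scholar in a single number that balances productivity and impact. Formally, it is the largest number h such that h of a scholar's papers have each been cited at least h times. Anna, our example, has an h-index of 25 because she has 25 papers that have each received at least 25 citations.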
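The definition translates directly into a short procedure: sort the papers by citation count, then find the last rank at which a paper still has at least as many citations as its rank. The sketch below assumes a plain list of per-paper citation counts; the function name `h_index` and the sample numbers are illustrative and not drawn from any real profile.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    # The paper at rank r (1-based) must have at least r citations.
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break  # every later paper is cited even less, so stop here
        h = rank
    return h


# Hypothetical record: 25 papers with 25 citations each, plus three less-cited ones.
papers = [25] * 25 + [10, 3, 0]
print(h_index(papers))  # 25
```

Sorting first makes the check a single pass: once a paper falls below its rank, every later (less cited) paper does too.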

Today, this number is used for both informal evaluation (like sizing up colleagues) and formal evaluation (like tenure and promotion).

We think that's a problem.

The h-index is failing on the job, and here's how:

1. Comparing h-indices is comparing apples and oranges.

Let's revisit Anna LLobet, our example. Her h-index is 25. Is that good? Well, "good" depends on several variables. First, what is her field of study? What counts as "good" in Clinical Medicine (84) differs from what counts as "good" in Mathematics (19). Some fields simply publish and cite more than others.

Next, how far along is Anna in her career? Junior researchers have an h-index disadvantage. Their h-index can only be as high as the number of papers they have published, even if each paper is highly cited. If she is only 9 years into her career, Anna will not have published as many papers as someone who has been in the field for 35 years.
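The cap follows directly from the definition: h can never exceed the number of papers. A small sketch with two hypothetical records (invented numbers, with a self-contained copy of an illustrative `h_index` helper) makes the effect concrete.

```python
def h_index(citations):
    # Largest h such that h papers each have at least h citations.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break
        h = rank
    return h


# Hypothetical records (invented numbers).
junior = [500] * 9   # 9 papers, 500 citations each: 4,500 citations in total
senior = [30] * 40   # 40 papers, 30 citations each: 1,200 citations in total

print(h_index(junior), len(junior))  # 9 9   (capped by the paper count)
print(h_index(senior), len(senior))  # 30 40
```

The junior record has almost four times as many citations in total, yet its h-index is less than a third of the senior one.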

Furthermore, citations take years to accumulate. As a consequence, the h-index has little discriminatory power for young scholars and can't be used to compare researchers at different stages of their careers. Comparing Anna to a more senior researcher would be like comparing apples and oranges.

Did you know that Anna also has more than one h-index? Her h-index (and yours) depends on which database you are looking at, because citation counts differ from database to database. (Which one should she list on her CV? The highest one, of course. :))
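The same calculation explains why one person can have several h-indices. The sketch below runs the same five papers through it twice, using two hypothetical sets of citation counts; `database_a` and `database_b` are placeholders, not real services, and the numbers are invented.

```python
def h_index(citations):
    # Largest h such that h papers each have at least h citations.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break
        h = rank
    return h


# The same five papers, as two hypothetical databases might count their citations.
database_a = [40, 22, 15, 9, 6]
database_b = [31, 18, 7, 4, 2]

print(h_index(database_a))  # 5
print(h_index(database_b))  # 4
```

A single paper slipping below its rank (here the fifth, counted at 6 citations in one source and 2 in the other) is enough to change the result.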

2. The h-index ignores science that isn't shaped like an article.

What if you work in a field that values patents over publications, like chemistry? Sorry, only articles count toward your h-index. The same goes for software, blog posts, and other types of "non-traditional" scholarly outputs (and even one you'd consider "traditional": books).

Similarly, the h-index only uses citations to your work that come from journal articles written by other scholars. Your h-index can't capture whether you've had tremendous influence on public policy or on improving global health outcomes. That doesn't seem smart.

3. A scholar's impact can't be summed up with a single number.

We've seen from the journal impact factor that single-number impact indicators can encourage lazy evaluation.

At the scariest times in your career, such as when you are going up for tenure or promotion, do you really want to encourage that? Of course not. You want your evaluators to see all of the ways you've made an impact in your field. Your contributions are too many and too varied to be summed up in a single number. Researchers in some fields are rejecting the h-index for this very reason.

So, why judge Anna by her h-index alone?

Questions of completeness aside, the h-index might not measure the right things for your needs. Its particular balance of quantity versus influence can miss the point of what you care about. For some people, that might be a single hit paper, popular with both other scholars and the public. (This article on the "Big Food" industry and its global health effects is a good example.) Others might care more about how often their many rarely cited papers are used by practitioners (like those by CG Bremner, who studied Barrett syndrome, a lesser-known relative of gastroesophageal reflux disease). When evaluating others, the metrics you use should get at the root of what you're trying to understand about their impact.

4. The h-index is dumb when it comes to authorship.

Some physicists are one of a thousand authors on a single paper. Should their fractional authorship weigh equally with your single-author paper? The h-index doesn't take that into consideration.

What if you are first author on a paper? (Or last author, if that's the way you indicate lead authorship in your field.) Shouldn't citations to that paper count more for you than for your co-authors, since you had a larger influence on its development?

The h-index doesn't account for these nuances.
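One simple adjustment discussed in the bibliometrics literature is fractional counting: divide each paper's citations by its number of authors before computing h. The sketch below assumes each record is a `(citations, number_of_authors)` pair with invented numbers, and the function names are illustrative. It is one possible variant, not a standard, and it does not address the first- or last-author question at all.

```python
def h_index(scores):
    # Largest h such that h papers each have a score of at least h.
    ranked = sorted(scores, reverse=True)
    h = 0
    for rank, score in enumerate(ranked, start=1):
        if score < rank:
            break
        h = rank
    return h


def fractional_h_index(papers):
    # papers: list of (citations, number_of_authors) pairs.
    # Each author is credited with an equal share of a paper's citations.
    shares = [citations / n_authors for citations, n_authors in papers]
    return h_index(shares)


# Hypothetical records, ten papers each.
solo_author = [(20, 1)] * 10      # single-author papers, 20 citations each
big_collab = [(2000, 1000)] * 10  # 1,000-author papers, 2,000 citations each

for name, papers in [("solo", solo_author), ("collab", big_collab)]:
    standard = h_index([citations for citations, _ in papers])
    print(name, standard, fractional_h_index(papers))
# solo 10 10
# collab 10 2
```

Both records earn the same standard h-index of 10; splitting credit among a thousand co-authors drops the collaboration record to 2.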

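So, how should we use the h-index?

Many have attempted to fix the h-index's weaknesses with various computational models that, for example, reward highly cited papers, correct for career length, rank authors' papers against other papers published in the same year and source, or count just the average citations of the most high-impact "core" of an author's work.

None of these have been widely adopted, and all of them boil down a scientist's career to a single number that only measures one type of impact.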
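Three well-known h-index variants illustrate what such computational fixes look like: the g-index (largest g whose top g papers together have at least g² citations, which rewards highly cited papers), the m-quotient (h divided by years since first publication, a career-length correction), and the A-index (average citations of the h-core). The citation record and career length below are hypothetical, and each function is a simplified sketch of its indicator rather than a reference implementation. Note that every one of them still returns a single number.

```python
def h_index(citations):
    # Largest h such that h papers each have at least h citations.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break
        h = rank
    return h


def g_index(citations):
    # Largest g such that the top g papers together have at least g**2 citations.
    # This sketch caps g at the number of papers; some definitions pad the
    # list with zero-citation papers, which can push g higher.
    ranked = sorted(citations, reverse=True)
    g, running_total = 0, 0
    for rank, cites in enumerate(ranked, start=1):
        running_total += cites
        if running_total < rank * rank:
            break
        g = rank
    return g


def m_quotient(citations, career_years):
    # h-index divided by years since first publication (career_years > 0).
    return h_index(citations) / career_years


def a_index(citations):
    # Average citations of the h-core, i.e. the h most-cited papers.
    h = h_index(citations)
    if h == 0:
        return 0.0
    core = sorted(citations, reverse=True)[:h]
    return sum(core) / h


# Hypothetical record: seven papers, six years since the first one.
citations = [50, 30, 12, 8, 5, 4, 1]
print(h_index(citations))                  # 5
print(g_index(citations))                  # 7
print(round(m_quotient(citations, 6), 2))  # 0.83
print(a_index(citations))                  # 21.0
```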

What we need is more data.

Altmetrics (new measures of how scholarship is recommended, cited, saved, viewed, and discussed online) are just the solution. Altmetrics measure the influence of all of a researcher's outputs, not just their papers. A variety of new altmetrics tools can help you get a more complete picture of others' research impact, beyond the h-index. You can also use these tools to communicate your own, more complete impact story to others.

So what should you do when you run into an h-index? Have fun looking if you are curious, but don't take the h-index too seriously.

Are you more than your h-index? Email us today at [email protected] for some free "I am more than my h-index" stickers!
